Unmanned Aerial Robot Navigation for Exploring Unknown Environments Using Reinforcement Learning

This project proposes using reinforcement learning to build a system that allows an unmanned drone to navigate and explore an unknown environment while attempting to reach a predefined goal. Navigation relies on local sensor information rather than on global localization of the drone: the system states are built from the distance measurements of the local sensors, which are used to detect obstacles, while global information is used to establish the direction and distance of the goal. Learning is based on the Q-Learning algorithm, with a reward function that encourages the drone to avoid obstacles and pursue the goal. The complete algorithm is developed in Python and implemented in the V-REP robot simulation environment. The results show that the drone learns to avoid obstacles while pursuing the goal.
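The project's own code is not reproduced here. The following is a minimal sketch of tabular Q-Learning under assumed details, to show how local distance readings and the goal direction can be combined into a discrete state and updated with a reward. Everything in it is hypothetical: the three distance buckets and their thresholds (`NEAR`, `MID`), the eight goal-bearing sectors, the three-action set, the progress-based reward values, and the learning parameters. No V-REP API calls are shown; the sensor distances and drone pose are assumed to come from the simulator.

```python
import math
import random
from collections import defaultdict

# Hypothetical thresholds (metres) for bucketing each range-sensor reading.
NEAR, MID = 0.5, 1.5
# Hypothetical discrete action set for the drone.
ACTIONS = ("forward", "left", "right")


def bucket(distance):
    """Map a local sensor distance to 0 (near), 1 (mid) or 2 (far)."""
    if distance < NEAR:
        return 0
    if distance < MID:
        return 1
    return 2


def goal_sector(robot_xy, robot_yaw, goal_xy, n_sectors=8):
    """Discretize the goal bearing relative to the drone heading.

    Sector n_sectors // 2 means the goal is straight ahead.
    """
    dx = goal_xy[0] - robot_xy[0]
    dy = goal_xy[1] - robot_xy[1]
    bearing = math.atan2(dy, dx) - robot_yaw
    bearing = (bearing + math.pi) % (2 * math.pi) - math.pi  # wrap to [-pi, pi)
    return int((bearing + math.pi) / (2 * math.pi) * n_sectors) % n_sectors


def make_state(sensor_distances, robot_xy, robot_yaw, goal_xy):
    """State = bucketed local distances plus the goal-direction sector."""
    return tuple(bucket(d) for d in sensor_distances) + (
        goal_sector(robot_xy, robot_yaw, goal_xy),
    )


def reward(prev_goal_dist, goal_dist, collided, reached):
    """Assumed reward: penalize collisions, reward reaching and approaching the goal."""
    if collided:
        return -100.0
    if reached:
        return 100.0
    return 10.0 * (prev_goal_dist - goal_dist)


class QLearner:
    """Tabular Q-Learning with epsilon-greedy action selection."""

    def __init__(self, alpha=0.1, gamma=0.9, epsilon=0.1, seed=None):
        self.q = defaultdict(float)  # (state, action) -> estimated value
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.rng = random.Random(seed)

    def act(self, state):
        # Explore with probability epsilon, otherwise pick the greedy action.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(ACTIONS)
        return max(ACTIONS, key=lambda a: self.q[(state, a)])

    def update(self, state, action, r, next_state, done):
        # Q(s,a) <- Q(s,a) + alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))
        best_next = 0.0 if done else max(self.q[(next_state, a)] for a in ACTIONS)
        td_target = r + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (td_target - self.q[(state, action)])
```

For example, three sensor readings of 2.0, 0.3 and 1.0 m with the goal straight ahead give the state `(2, 0, 1, 4)`. Moving from 5.0 m to 4.5 m from the goal yields a reward of 5.0 under this assumed scheme. Keeping the state coarse keeps the Q-table small, which is what makes tabular Q-Learning practical; finer sensor buckets or more bearing sectors trade faster convergence for more precise behaviour.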

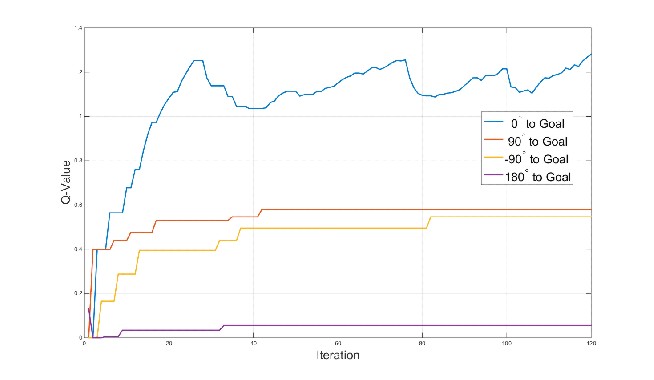

State-Action Convergence

Aerial Robot and Obstacles

Robot Navigation During the Learning Process